AI's Reputation Problem 2: Revengeance

This is an update to something I wrote a year ago: AI's Reputation Problem.

For the last week or so, my wife and I have been sitting on a beach in Mexico, catching up on our summer reading lists. What great timing, then, for the Chicago Sun-Times Summer Reading list to come out! If you don't already know what's happened, here's the tl;dr: rather than doing his own independent research and review, the author of this list opted to use ChatGPT, which, predictably, hallucinated a number of book titles and blurbs that don't exist. 404 Media broke the story, and The Atlantic has a great explainer on how it all happened.

But what I want to talk about here is that this isn't inherently a problem because an LLM was used. Obviously, we know that by definition, AI/LLM tools cannot do work in good faith, given that they were trained on stolen and unconsented data, with tech companies going so far as to say that "requiring tech companies to ask permission to train AI on copyrighted work would 'kill the industry'." What does that tell you about the industry? But this is beside the point (although, not really?) of what I originally wanted to discuss.

As Brenna Clark Gray put it,

"This is not helping my gut feeling that everyone who claims they thoroughly check their AI outputs is lying (because if they did it would negate all efficiencies). What chance the “only” time it wasn’t checked is the time all the errors are so obvious? And no one will learn anything from this."

This is actually a problem that can be told in three parts:

PIVOT TO AI

If you were online in 2015, you may have seen many news organizations doing something called "pivot to video." This was basically a trend started by Meta (which juiced its video playback stats to drive more engagement to its perpetually dying platform, Facebook) that had newsrooms cut staffing for written content in favour of short-form video, often hosted on third-party platforms like YouTube or, notoriously, Facebook Video. Our current "Pivot to AI" mirrors this decade-old meme: the embrace of AI in newsrooms has led to widespread layoffs (unfortunately, layoffs seem to be a tried-and-true way to juice a company's stock up and to the right) in favour of cheaper alternatives, i.e. freelancers + LLMs. This inherently means prioritizing quick publishing and "efficiency" (whatever that means in the context of news reporting) over good-faith editing and fact-checking. All of this is to say that the obvious end result is a significant degradation in content quality and an increased risk of spreading unverified information through unchallenged LLM hallucinations.

SELF-SABOTAGE

Google's pursuit of ad-driven clicks has meant they've intentionally neutered their former flagship product, Search. What used to work almost magically has become an ad-infested obstacle course, pushing frustrated users away from what was once the gold standard until they say, "fuck this, let's see what the chatbot has to say." Making LLMs look attractive in comparison to Search is a natural outcome, but one that was absolutely engineered by profit motives (see the end of ZIRP as the big reason for this overnight pivot to AI). Want more evidence? Count how many times Google mentioned Gemini during its annual I/O developer conference.

PUBLIC PERCEPTION

Companies that push LLM products have muddied the waters of public understanding so thoroughly that we now live in a world of overconfident, over-reliant use of these tools and their output, regardless of how utterly stripped of context it is (see Gemini's AI Mode in Search). This, combined with the lack of resources to verify and the profit motive, means we now live in a world with an unchecked spread of obvious hallucinations. Without proper vetting, these errors can spread (and already are spreading) through the Fourth Estate (which itself now relies heavily on outsourced and freelanced articles) to an unsuspecting and largely uncritical audience who may, and often do, accept AI-generated content as factual. And I mean, I don't blame people for this, because they've been lied to constantly about the promise of AI/LLMs over the last three years or so.

TL;DR

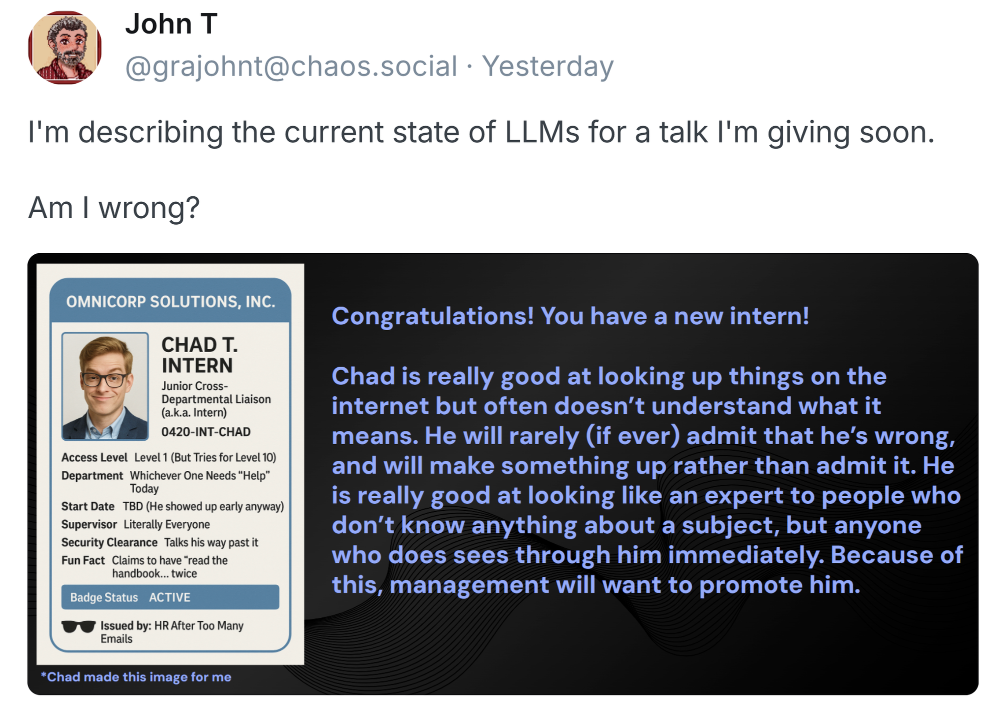

The problem with the intrusion of LLMs is not that they get things wrong, nor that they're everywhere (those are serious problems that I don't have the answer to), but rather that they force everyone to ask what is real, making it harder to distinguish what is actually real in the first place. This is because LLMs cannot distinguish fact from fiction, and are not designed to tell the truth. In the same way that Duolingo's explicitly stated goal is not to teach you new languages but to draw your attention away from other apps like TikTok (hello, attention economy), these LLMs are designed to keep you engaged by constantly "yes, and-ing" you so you keep using them. They are designed to persuade, yet they are being deployed in sectors where truth and keen attention to factual detail matter, i.e. democracy, education, science, health, media, law (and perhaps most grossly, the prison system), etc.

Completely setting aside, for a moment, the ethics of using LLMs and how they're trained, the bias inherent in the models themselves, the vast environmental harm they pose, and the hype that makes it almost impossible for any casual user not in the know to discern their true capabilities (take, for example, Apple's repeatedly delayed updates to make Siri usable), the issue in this specific situation is less about what these tools can do in an ideal world (which we do not currently live in) and more about how, and for what, people and companies will end up using them. The Chicago Sun-Times debacle is a great preview of how hallucinations and sycophancy are not unintended consequences of AI; they are features, not bugs.

In my professional life, I've found exactly one use case where an LLM is sometimes a time-saving tool. In my personal life, however, any time I've tried using one for writing, I more often than not spend so much time editing and revising the output that I inevitably throw my hands in the air and just write the thing myself, faster and less verbosely. Writing is something I love, and it brings genuine joy to my life; I cannot imagine trying to actively supplant that with something that doesn't understand the connections between words and their meaning (let alone not even bothering to proofread the result and remove leftover LLM prompts from your personal writing), especially when said tool can so easily be manipulated into spreading anti-democratic propaganda.

It's tempting to say that all of this skepticism comes from my online bubble, but these are all examples of intrusions happening "offline." How many times have you heard of tech CEOs using LLMs to summarize messages from their friends and respond in kind? I tried this myself out of curiosity several months ago, and the experience was horrid. The summaries stripped the messages of so much crucial context that I ended up reading the actual messages anyway after reading the summary. Efficiency!

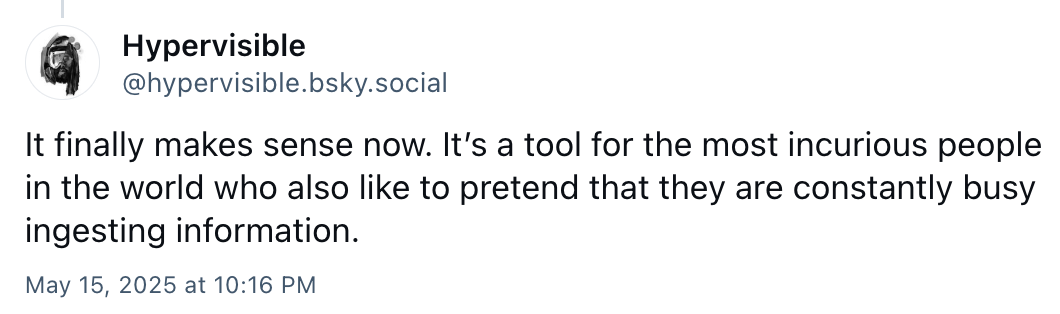

Ghouls like Zuckerberg and Nadella want to introduce fake friends into your life and replace you with these glorified chatbots because they're deeply lonely and don't view human relationships, and everything they contain, as fundamentally worthwhile. They want to make human relationships efficient and productive, even going so far as to recommend outsourcing parenting to their bots. I don't know about you, but this sounds deeply weird and deeply depressing to me. Which is probably why I keep coming back to this:

Unbelievably scathing, but absolutely hits the nail on the head.

If you're at all interested in learning more about everything I've touched on above, Ed Zitron's excellent article, "The Era of the Business Idiot," is an absolute must-read. I will warn you, it's long and full of links that will send you down all sorts of rabbit holes, but stick with it to the end. Zitron has spent infinitely more time than I have thinking about all of this and, as a result, does an incredible job of tying all these seemingly disparate pieces together. And when you're done reading that, watch this clip of Ken Jennings and this fantastic video essay by Taylor Lorenz, and read this article on refusing tech fascism by Tante. And if, after all that, you still want more, here are some solid book recommendations:

More Everything Forever by Adam Becker

Empire of AI by Karen Hao

Quick Updates:

A little over a year ago, I wrote two articles that I wanted to quickly follow up on. The first was about AI's reputation problem, in which I briefly mentioned a deeply weird and deeply disturbing cult emerging in tech called TESCREAL. This video is a fantastic way to understand what it is, and exactly how and why it's a deeply dumb problem.

The second was about how the Kindle was Almost Perfect. Three years and a new product cycle later, not much has changed. When my Paperwhite met an unfortunately early demise, I ended up picking the Kobo Clara BW to replace it. It's smaller and lighter and has all the same key features as the Paperwhite at half the cost, and it goes beyond that with OverDrive integration and an easy drag-and-drop file system for managing your books. And to top it all off, Kobo is Canadian. So if you're in the market for a new e-reader, don't buy from Amazon, because all you'll get is a less capable device filled with hidden ads at nearly double the price.

Find me online over on Bluesky (@tapaseaswar.com) or on the Fediverse (@tapas@tapaseaswar.com)!